If you work in the technology field, you cannot hope to escape the noise surrounding Big Data.

The buzz is getting louder, and in just about 12 months the discussion has shifted from “Big Data, a hype” to “Big Data, a challenge”. As many white papers and blogs written over the last 10 years have put forward, Big Data is the new frontier of innovation and productivity growth. For small and large businesses, and for private and public organisations alike, it has become imperative that Big Data be part of the overall business strategy.

The question companies now ask me is: how do we develop a Big Data strategy?

I propose the following six steps (from a data analyst's point of view):

Step 1: Comprehensive Data Inventory.

So I ask once again: what is Big Data? Big Data is all about data, and that data falls into three groups:

- Group 1: data that organisations have been collecting about their customers through billing, customer care, e-commerce transactions, emails and market surveys, plus traditional sources such as the ABS (Australian Bureau of Statistics).

- Group 2: data that people volunteer on social media, e.g. Facebook and Twitter.

- Group 3: data that global organisations such as Google, Facebook, Amazon and eBay have collected over the past decade.

The first step in developing a Big Data strategy is to fully understand what data the company holds. For many organisations this may be the first time a comprehensive data inventory has been done, and discovering what data the company has, and how much of it, can be a big eye-opener. Focusing on customers, gather all customer-related data and any data that can be linked to customers. Mine the data to discover what it shows, and visualise it, because both regular and unusual patterns are easier to spot visually. For the data discovery and visualisation work, it is advisable to hire a data scientist or statistician from outside the company for two reasons: 1) a fresh set of eyes, free of internal biases and BAU (business-as-usual) processes, and 2) the expertise to measure the statistical significance of observations.
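To make the inventory concrete, here is a minimal Python sketch of profiling data sources. It assumes each source can be exported as a list of records (dicts) and that a record is linkable to a customer when it carries a `customer_id` field; the source names, field names and sample records are all hypothetical stand-ins for your own systems.

```python
from dataclasses import dataclass


@dataclass
class SourceProfile:
    """Summary of one data source in the inventory."""
    name: str
    record_count: int
    fields: list
    linkable_share: float  # fraction of records carrying a customer_id


def profile_source(name, records, key="customer_id"):
    """Count records, collect field names and measure how many link to a customer."""
    fields = sorted({field for record in records for field in record})
    linked = sum(1 for record in records if record.get(key) is not None)
    share = linked / len(records) if records else 0.0
    return SourceProfile(name, len(records), fields, share)


def build_inventory(sources):
    """Profile every source and list the largest first."""
    profiles = [profile_source(name, recs) for name, recs in sources.items()]
    return sorted(profiles, key=lambda p: p.record_count, reverse=True)


# Hypothetical sample data standing in for real exports.
sources = {
    "billing": [
        {"customer_id": 1, "amount": 120.0},
        {"customer_id": 2, "amount": 80.5},
        {"customer_id": 3, "amount": 42.0},
    ],
    "customer_care": [
        {"customer_id": 2, "channel": "phone"},
        {"customer_id": None, "channel": "email"},
    ],
    "market_survey": [
        {"respondent": "r1", "score": 7},
    ],
}

for p in build_inventory(sources):
    print(f"{p.name:15} records={p.record_count:3} "
          f"linkable={p.linkable_share:.0%} fields={p.fields}")
```

Sources with a low linkable share, like the survey here, are the ones that need a matching key before they can feed customer-level analysis.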

Step 2: Strategic Business Requirements Gathering.

Data is used to inform business processes, decision-making, policy-making and planning, so it is important to understand how the data is being used and who its end-users are. Go directly to the decision-makers, the C-level executives if you must, and you might find that they are asking fundamental questions about, e.g., market share, price elasticity, customer value, customer profitability and correct market segmentation. Map those questions and define your Question-to-Answer strategy: what are the key questions being asked, and how can they be answered? Would Group 1 data be sufficient to answer them, or do you need to tap into Group 2 and Group 3 data? This step will also determine what types of statistical models need to be developed, which will ultimately define the type of software and infrastructure required.
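A Question-to-Answer map can start as a simple lookup from each key question to the data groups (as defined in Step 1) judged necessary to answer it. The sketch below is hypothetical: the questions and their group assignments are illustrative judgments, not recommendations.

```python
# Hypothetical Question-to-Answer map: each business question is tagged with
# the data groups (1, 2, 3 as defined in Step 1) judged necessary to answer it.
question_map = {
    "What is our market share?": {1, 3},
    "How price-elastic is demand?": {1},
    "What is each customer's lifetime value?": {1},
    "How do customers talk about our brand?": {2},
}


def groups_required(qmap):
    """Union of all data groups needed across the key questions."""
    required = set()
    for groups in qmap.values():
        required |= groups
    return required


def questions_beyond_group1(qmap):
    """Questions that Group 1 (internal) data alone cannot answer."""
    return [q for q, groups in qmap.items() if groups - {1}]


print("Data groups required:", sorted(groups_required(question_map)))
for q in questions_beyond_group1(question_map):
    print("Needs external data:", q)
```

If `questions_beyond_group1` comes back empty, internal data may be enough for now, which keeps the later infrastructure decisions smaller.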

Step 3: Existing IT Infrastructure and Software Inventory.

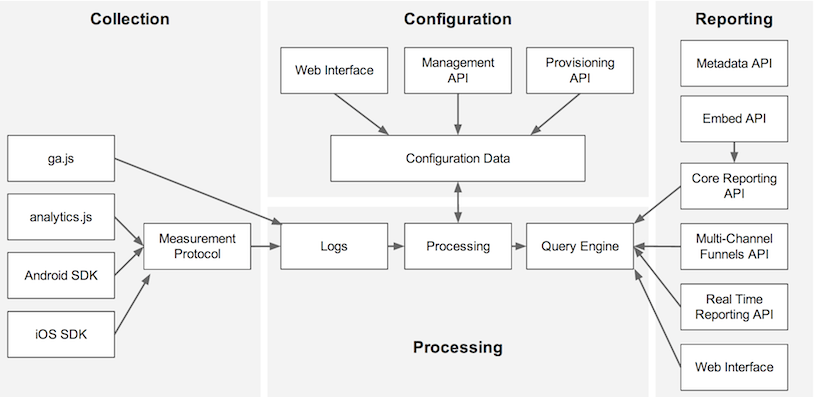

Using what you learned in Steps 1 and 2, undertake an inventory of your IT infrastructure and applications. Evaluate whether your existing IT capability is up to what you want to do with your data. Your own IT department should be the source of intelligence for this exercise; if you have outsourced your IT, hire technology-agnostic consultants who are not motivated only by selling you their wares. The key point here is: don't start buying new software or building new infrastructure until you have done Steps 1 and 2 and completed this step. Chances are you have plenty of software and systems but are not Big Data ready. It is also possible that you already have Big Data infrastructure in some form and, depending on your business requirements, all you need is the analytics capability to mine, analyse and model the data. In that case, you might only need to hire data scientists or statisticians who can work with structured and unstructured data.
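The comparison in this step is essentially a gap analysis between required and existing capabilities. Here is a minimal sketch, assuming each required capability can be tagged as either "infrastructure" or "analytics"; the capability names and tags are hypothetical placeholders for your own Step 2 and Step 3 outputs.

```python
# Hypothetical capability catalogue: each required capability is tagged as
# "infrastructure" or "analytics". Replace with your own Step 2 and 3 outputs.
required = {
    "scalable storage": "infrastructure",
    "unstructured text ingestion": "infrastructure",
    "statistical modelling": "analytics",
    "data mining": "analytics",
}
existing = {"scalable storage", "unstructured text ingestion", "BI reporting"}


def capability_gap(required, existing):
    """Return the required capabilities not yet in place, grouped by kind."""
    gap = {}
    for capability, kind in required.items():
        if capability not in existing:
            gap.setdefault(kind, []).append(capability)
    return gap


def analytics_only(gap):
    """True when every missing capability is an analytics skill."""
    return bool(gap) and set(gap) == {"analytics"}


gap = capability_gap(required, existing)
print("Gap:", gap)
print("Hiring analysts may be enough:", analytics_only(gap))
```

When `analytics_only` is true, the situation matches the scenario above where hiring data scientists or statisticians, rather than buying new infrastructure, may be sufficient.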

Step 4: Build Business Case.

At this stage you should have gathered the key inputs to your project business case: costs and benefits. Again, involve IT to design a prototype and, drawing on the steps above, determine your project cost. Once project costs are estimated, compare them with the expected benefits, e.g. cost savings, productivity growth or revenue generation. A strong ROI is the key to a strong business case and to generating interest from potential sponsors.
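The cost-versus-benefit comparison boils down to simple arithmetic. The sketch below computes an undiscounted ROI, (benefits − costs) / costs, and a simple payback year; all dollar figures are hypothetical. A fuller business case might also discount future benefits (e.g. net present value), which is not shown here.

```python
def roi(total_benefits, total_costs):
    """Simple (undiscounted) return on investment: net gain per dollar spent."""
    if total_costs <= 0:
        raise ValueError("total_costs must be positive")
    return (total_benefits - total_costs) / total_costs


def payback_year(annual_benefits, upfront_cost):
    """First year in which cumulative benefits cover the upfront cost, or None."""
    cumulative = 0.0
    for year, benefit in enumerate(annual_benefits, start=1):
        cumulative += benefit
        if cumulative >= upfront_cost:
            return year
    return None


# Hypothetical figures (in dollars) for illustration only.
project_cost = 1_500_000          # prototype + infrastructure + staff
annual_benefits = [300_000,       # year 1: cost savings
                   700_000,       # year 2: savings + productivity
                   900_000]       # year 3: savings + new revenue

total = sum(annual_benefits)
print(f"3-year ROI: {roi(total, project_cost):.0%}")
print("Payback in year:", payback_year(annual_benefits, project_cost))
```

With these made-up numbers, benefits of $1.9M against a $1.5M cost give an ROI of about 27%, with payback in year 3.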

Step 5: Develop Big Data Capability – Infrastructure, Metadata, Software, BI (Reporting).

This is the actual project management step, which could run for 12 to 18 months. Spending the first 6 months developing an end-to-end prototype will be critically worthwhile and cost-effective, even if you end up having to stop the project!

Step 6: Build Analytics Capability (Hire).

Hire data scientists or statisticians, then loop back to data discovery. Spend a lot of time on discovery, which may involve data cleansing and transformation. Only once you fully understand your data can you construct an implementable segmentation, develop behavioural models and perform predictive, decision and policy analytics.
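To illustrate why cleansing comes before segmentation, here is a minimal sketch that normalises customer IDs, drops blank values and duplicates, and then applies a rule-based value segmentation. The records and spend thresholds are hypothetical, and the simple thresholds stand in for the statistical segmentation (e.g. clustering) a statistician would more likely build.

```python
# Hypothetical raw customer records, with the kinds of problems discovery turns up.
raw = [
    {"customer_id": "C1", "annual_spend": "1200"},
    {"customer_id": "C2", "annual_spend": "150"},
    {"customer_id": "C2", "annual_spend": "150"},   # duplicate
    {"customer_id": "C3", "annual_spend": ""},      # missing value
    {"customer_id": "c4 ", "annual_spend": "640"},  # inconsistent ID format
]


def cleanse(records):
    """Normalise IDs, convert spend to float, drop blanks and repeat IDs (keep first)."""
    seen = set()
    clean = []
    for record in records:
        cid = record["customer_id"].strip().upper()
        spend = record["annual_spend"].strip()
        if not spend or cid in seen:
            continue
        seen.add(cid)
        clean.append({"customer_id": cid, "annual_spend": float(spend)})
    return clean


def segment(records, thresholds=((1000, "high value"), (500, "mid value"))):
    """Rule-based value segments; thresholds must be in descending order."""
    labelled = {}
    for record in records:
        label = "low value"
        for cutoff, name in thresholds:
            if record["annual_spend"] >= cutoff:
                label = name
                break
        labelled[record["customer_id"]] = label
    return labelled


customers = cleanse(raw)
print(segment(customers))
```

Run on the raw records directly, the duplicate and the unnormalised "c4 " ID would distort segment counts, which is the point of spending time on discovery first.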

For more information on Big Data strategies, contact killerdatablog@gmail.com.